Combining task- and data-level parallelism for high-throughput CNN inference on embedded MPSoCs

Svetlana Minakova, Erqian Tang and Todor Stefanov

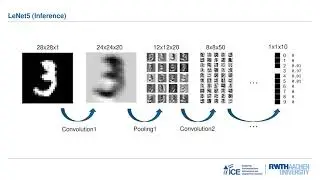

Nowadays, Convolutional Neural Networks (CNNs) are widely used to perform various tasks in areas such as computer vision or natural language processing. Some CNN applications require high-throughput execution of CNN inference on embedded devices, and many modern embedded devices are based on CPUs-GPUs multi-processor systems-on-chip (MPSoCs). Ensuring high-throughput execution of CNN inference on embedded CPUs-GPUs MPSoCs is a complex task, which requires efficient utilization of both the task-level (pipeline) and the data-level parallelism available in a CNN. However, existing Deep Learning frameworks utilize only task-level (pipeline) or only data-level parallelism, and do not take full advantage of all embedded MPSoC computational resources. Therefore, in this paper, we propose a novel methodology for efficient execution of CNN inference on embedded CPUs-GPUs MPSoCs. Our methodology ensures efficient utilization of both the task-level (pipeline) and the data-level parallelism available in a CNN to achieve high-throughput execution of CNN inference on embedded CPUs-GPUs MPSoCs.