‘On Neural Computing and Architectures’ by Nikitas Dimopoulos

Stamatis Vassiliadis Symposium

Title: On Neural Computing and Architectures

Speaker: Nikitas Dimopoulos, University of Victoria, Canada

Slides: http://samos-conference.com/Resources...

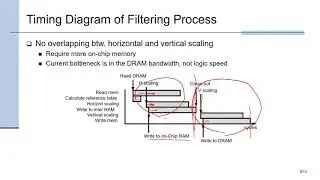

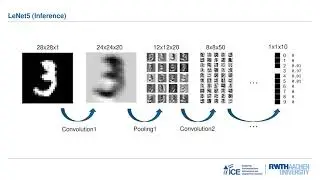

Abstract: Computational Neuroscience explores brain function in terms of the structures that make up the nervous system through mathematical modeling, simulation, and the analysis of experimental data. As a discipline, it differs from machine learning and neural networks, although all three cross-fertilize each other. The main difference is that Computational Neuroscience strives to develop models that are as close as possible to the observed physiology and structures, while neural networks, which focus on learning, develop models that may not be physiologically plausible. In this talk we shall present some of the standard neuron models and our experience using them to model complex structures such as regions of the hippocampus. We shall show the performance and energy requirements of these models on current processors. We shall consider the scalability of the current models towards more complex structures and, eventually, whole-brain simulations, and determine that current processors cannot be used to scale these computations. We shall survey efforts to develop architectures that hold the promise of scalability and identify areas for future research.
