Spark: Data Science as a Service: Spark Summit East talk by Shekhar Agrawal and Sridhar Alla

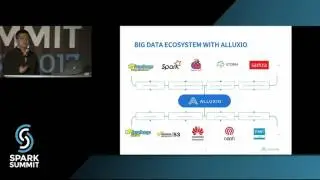

Almost all organizations now need data science, and the main challenge after choosing an algorithm is to scale it up and make it operational. At Comcast, we use several tools and technologies, such as Python, R, SAS, and H2O.

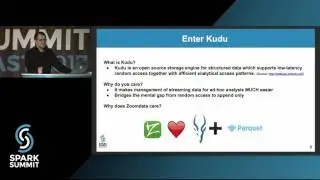

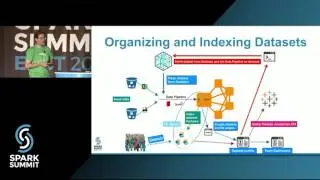

In this talk we will show how many common use cases rely on the same handful of algorithms, such as logistic regression, random forests, decision trees, clustering, and NLP.

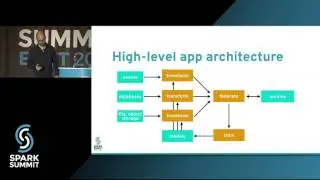

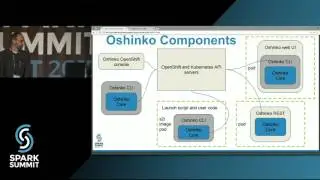

Spark has several machine learning algorithms built in and scales very well. We at Comcast therefore built a platform that provides data science as a service (DSaaS) on top of Spark, with a REST API for submitting and controlling jobs. This spares most users from writing (and repeating) boilerplate code, so they can focus on the actual requirements. We will show how we solved some of the problems of establishing feature vectors, choosing algorithms, and then deploying models into production.
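To make the idea of submitting a job through a REST API more concrete, here is a minimal sketch of what a client-side job description might look like. The abstract does not describe the actual API, so everything here is an assumption for illustration: the `TrainingJob` fields, the `SUPPORTED_ALGORITHMS` names, the `build_job_request` helper, and the example input path are all hypothetical. The sketch only covers validating a request and serializing it to JSON; sending it to a server is left out.

```python
import json
from dataclasses import dataclass, field, asdict
from typing import Dict, List, Optional

# Hypothetical algorithm identifiers, loosely based on the algorithms
# named in the abstract. The real platform's names are not known.
SUPPORTED_ALGORITHMS = {
    "logistic_regression",
    "random_forest",
    "decision_tree",
    "kmeans",
}

# Algorithms in this sketch that do not need a label column.
UNSUPERVISED = {"kmeans"}


@dataclass
class TrainingJob:
    """Hypothetical description of one model-training job."""
    algorithm: str
    input_path: str
    feature_columns: List[str]
    label_column: Optional[str] = None
    params: Dict[str, object] = field(default_factory=dict)


def build_job_request(job: TrainingJob) -> str:
    """Validate a job and return the JSON body a client could submit."""
    if job.algorithm not in SUPPORTED_ALGORITHMS:
        raise ValueError(f"unsupported algorithm: {job.algorithm}")
    if not job.feature_columns:
        raise ValueError("at least one feature column is required")
    if job.algorithm not in UNSUPERVISED and job.label_column is None:
        raise ValueError(f"{job.algorithm} requires a label column")
    # sort_keys makes the serialized body deterministic.
    return json.dumps(asdict(job), sort_keys=True)


# Example usage with made-up column names and path.
example = TrainingJob(
    algorithm="logistic_regression",
    input_path="hdfs:///data/example.parquet",
    feature_columns=["feature_a", "feature_b"],
    label_column="label",
    params={"maxIter": 20},
)
request_body = build_job_request(example)
```

The point of the design is that a user only declares the algorithm, the feature columns, and a few parameters; the code that turns this into a Spark job would live once on the server side rather than being rewritten by each user.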

We will showcase our use of Scala, R, and Python to implement models in the language of choice while still deploying them quickly into production on 500-node Spark clusters.