Data Tools and Technologies in Data Engineering

Data engineers use a variety of tools and technologies to build and maintain data pipelines, data warehouses, and other data infrastructure. Some of the most popular data tools and technologies used in data engineering include:

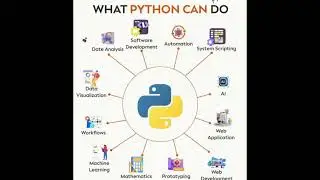

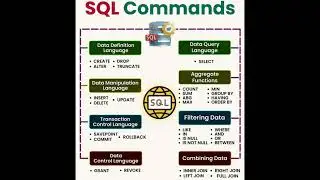

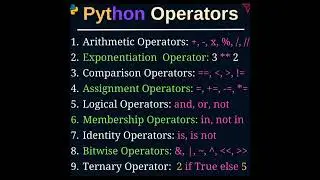

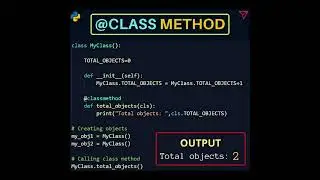

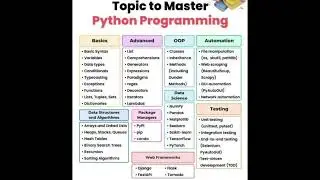

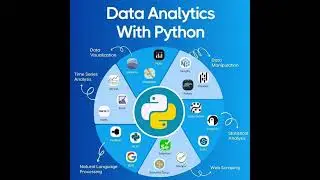

Programming languages: Python and SQL are the two most widely used languages in data engineering. Python is a general-purpose language that is well suited to data manipulation, analysis, and visualization. SQL is a query language used to extract and transform data in relational databases.

Data warehousing and big data processing: Apache Spark is a popular open-source unified analytics engine for large-scale data processing. It can process both structured and unstructured data from a variety of sources. Apache Hive is another popular open-source tool; it provides a SQL-like language, HiveQL, for querying and analyzing large datasets stored in the Hadoop Distributed File System (HDFS).

Data storage: Cloud data warehouses like Amazon Redshift and Google BigQuery are popular choices for storing and analyzing large datasets. They are managed services that scale to large data volumes and are accessed over the network rather than from on-premises hardware.

Data orchestration: Apache Airflow is a popular open-source workflow management platform used to automate the execution of data pipelines. It lets data engineers define complex data processing workflows in Python as directed acyclic graphs (DAGs) of tasks and run them on a schedule.

Data visualization and business intelligence (BI): Tableau, Power BI, and Looker are popular BI tools that allow data engineers and analysts to create interactive dashboards, reports, and visualizations to explore and analyze data.

In addition to these general-purpose tools and technologies, data engineers also use a variety of specialized tools for specific tasks, such as data quality assurance, data governance, and machine learning.

Here are some examples of how data tools and technologies are used in data engineering:

Python: Python is used to write data pipelines, transform data, and perform data analysis. It is also used to develop machine learning models.
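As a rough illustration of what "writing a data pipeline" in Python can look like, here is a minimal extract-transform-load sketch using only the standard library. The sample data, the column names, and the function names (`extract`, `transform`, `load`, `run_pipeline`) are all hypothetical; a real pipeline would read from files, APIs, or databases and write to a warehouse.

```python
import csv
import io
from collections import defaultdict

# Hypothetical raw input; in practice this would come from a file, API, or database.
RAW_CSV = """order_id,customer,amount
1,alice,10.50
2,bob,
3,alice,4.50
4,carol,20.00
"""


def extract(text):
    """Parse CSV text into a list of row dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Drop rows with a missing amount and cast the remaining amounts to float."""
    cleaned = []
    for row in rows:
        if not row["amount"]:
            continue  # skip incomplete records
        cleaned.append({"customer": row["customer"], "amount": float(row["amount"])})
    return cleaned


def load(rows):
    """Aggregate total spend per customer (stands in for writing to a warehouse)."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["customer"]] += row["amount"]
    return dict(totals)


def run_pipeline(text):
    """Chain the three stages: extract, then transform, then load."""
    return load(transform(extract(text)))


if __name__ == "__main__":
    print(run_pipeline(RAW_CSV))
```

Keeping each stage as a separate function makes it easier to test the stages on their own and to swap one out later, for example replacing `extract` with a database read.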

SQL: SQL is used to query and transform data in databases. It is also used to define the tables and views that make up a data warehouse, and many query engines support SQL over files stored in a data lake.
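To make the "query and transform" idea concrete, the sketch below runs SQL against an in-memory SQLite database through Python's built-in `sqlite3` module. The `orders` and `daily_revenue` tables and the `build_daily_revenue` function are made up for illustration; a cloud warehouse would use its own client library and SQL dialect, but the pattern of deriving a summary table from raw rows is similar.

```python
import sqlite3


def build_daily_revenue(conn):
    """Create a raw table, derive a summary table with SQL, and return its rows."""
    # Hypothetical raw table of orders.
    conn.execute(
        "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, order_date TEXT, amount REAL)"
    )
    conn.executemany(
        "INSERT INTO orders (order_date, amount) VALUES (?, ?)",
        [("2024-01-01", 10.0), ("2024-01-01", 5.0), ("2024-01-02", 7.5)],
    )
    # Transform step: aggregate raw orders into one row per day.
    conn.execute(
        """
        CREATE TABLE daily_revenue AS
        SELECT order_date, SUM(amount) AS revenue, COUNT(*) AS order_count
        FROM orders
        GROUP BY order_date
        """
    )
    return conn.execute(
        "SELECT order_date, revenue, order_count FROM daily_revenue ORDER BY order_date"
    ).fetchall()


if __name__ == "__main__":
    connection = sqlite3.connect(":memory:")
    for row in build_daily_revenue(connection):
        print(row)
    connection.close()
```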

Apache Spark: Apache Spark is used to process large datasets from a variety of sources. It is also used to develop machine learning models through its MLlib library.

Apache Hive: Apache Hive is used to query and analyze large datasets stored in HDFS.

Amazon Redshift: Amazon Redshift is the data warehouse service on Amazon Web Services, used to store and analyze large datasets in the cloud.

Google BigQuery: Google BigQuery is the data warehouse service on Google Cloud, used to store and analyze large datasets in the cloud.

Apache Airflow: Apache Airflow is used to automate the execution of data pipelines.
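The core idea behind an orchestrator, running tasks in an order that respects their dependencies, can be sketched in plain Python. The example below is not Airflow's API; it uses the standard library's `graphlib.TopologicalSorter` (Python 3.9+), and the `run_workflow` function and task names are hypothetical. Airflow adds scheduling, retries, and monitoring on top of this kind of dependency graph.

```python
from graphlib import TopologicalSorter


def run_workflow(tasks, dependencies):
    """Run each task only after all of its upstream tasks have finished.

    tasks: maps a task name to a zero-argument callable.
    dependencies: maps a task name to the set of task names it depends on.
    Returns the list of task names in the order they were executed.
    """
    order = list(TopologicalSorter(dependencies).static_order())
    executed = []
    for name in order:
        tasks[name]()
        executed.append(name)
    return executed


if __name__ == "__main__":
    # Hypothetical three-step pipeline: extract, then transform, then load.
    example_tasks = {
        "extract": lambda: print("extracting"),
        "transform": lambda: print("transforming"),
        "load": lambda: print("loading"),
    }
    example_dependencies = {"transform": {"extract"}, "load": {"transform"}}
    print(run_workflow(example_tasks, example_dependencies))
```

If the dependencies contain a cycle, `TopologicalSorter` raises `graphlib.CycleError`, which mirrors the requirement that a workflow graph be acyclic.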

Tableau, Power BI, and Looker: These BI tools are used to create interactive dashboards, reports, and visualizations to explore and analyze data.

Data tools and technologies evolve constantly, and new ones emerge regularly. Data engineers need to stay up to date with these changes to remain effective in their roles.
